Only 16% of companies worldwide depend heavily on digital technologies to run their businesses; the rest operate hybrid-digital or non-digital models.[i] The COVID-19 pandemic has changed that, forcing companies to finally embrace digital transformation.

Digital transformation is the process whereby businesses adopt new technologies and fundamentally change the way they deliver services to customers. In other words, it is how enterprises integrate digital technologies into their business operations.

Because of lockdown policies and work-from-home guidelines introduced in response to COVID-19, many workforces have had to shift their operations to the digital world in order to maintain stability and profitability. This unprecedented and unpredictable crisis has shown the world just how important technology is to modern businesses. Enterprises now have to rethink their digital transformation strategies and the pace at which they roll them out.

But companies face many barriers to rapidly overhauling their business environments. A lack of clear strategy and budget shortfalls are the two biggest challenges for most of them. Yet many businesses are taking the leap, since the pandemic leaves them no other option.

Levi’s is an example of a company that has successfully reduced its losses by accelerating its digital transformation. It automated most of its logistical processes, deployed an omni-channel e-commerce and delivery system, and invested heavily in data and artificial intelligence, which helped keep the company afloat. Strengthening the connection between digital technologies and operations has benefited both customers and employees.

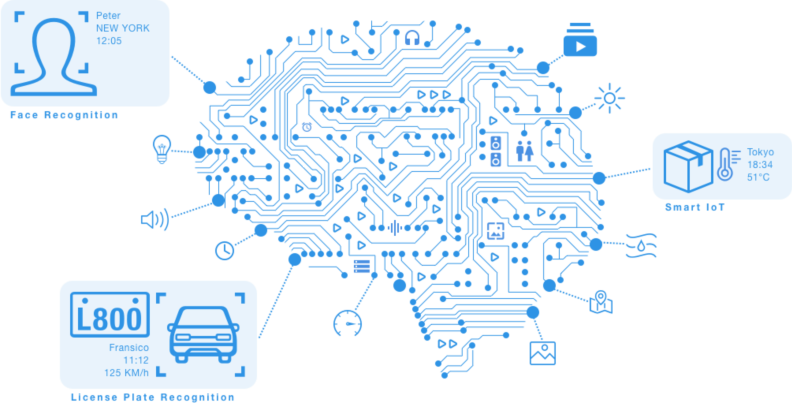

It’s not just retail: factories, hospitals and governments can also take advantage of digital transformation. The following technologies are key trends that are already helping organizations embrace a data-driven future and adapt to the new normal:

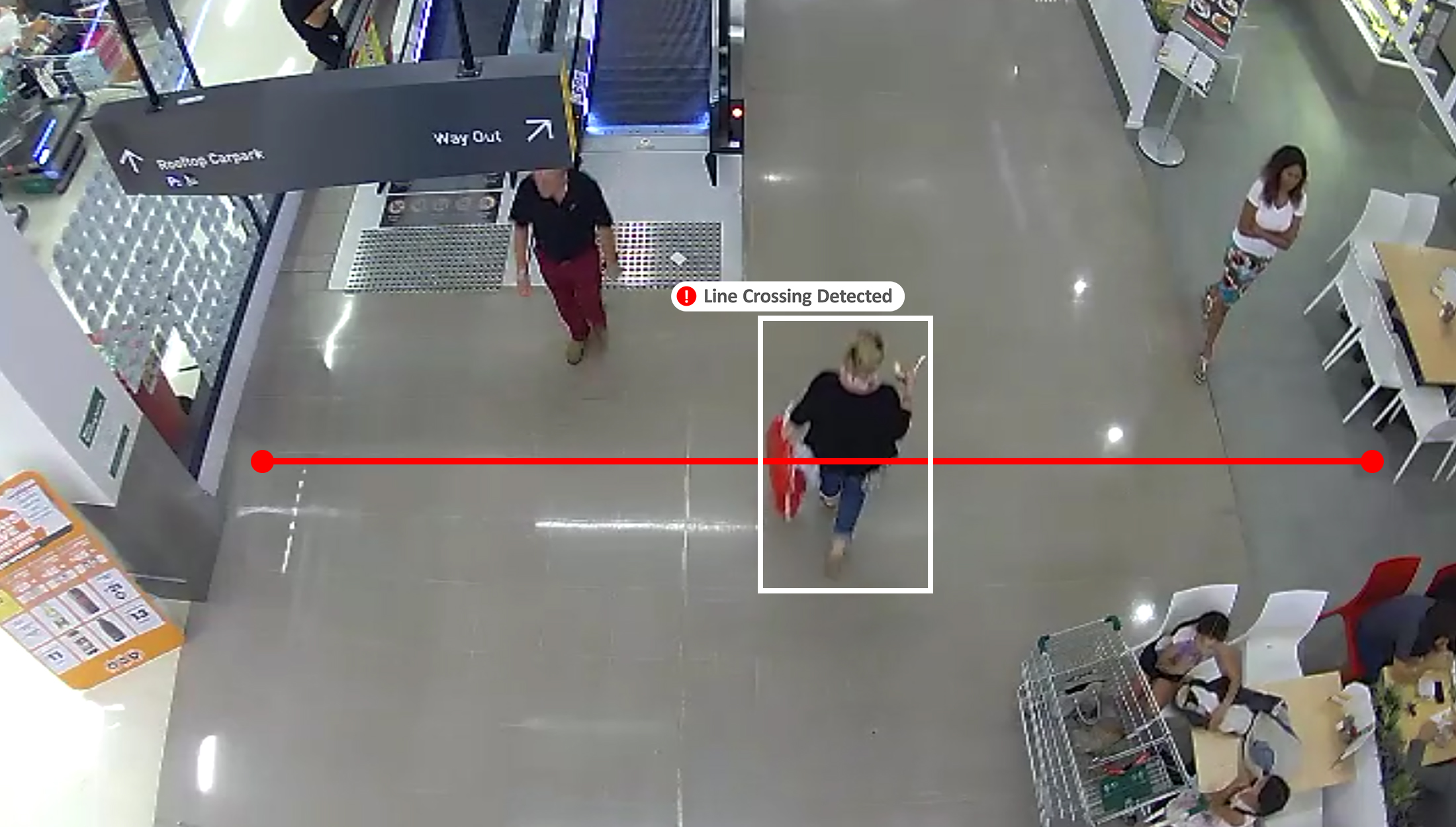

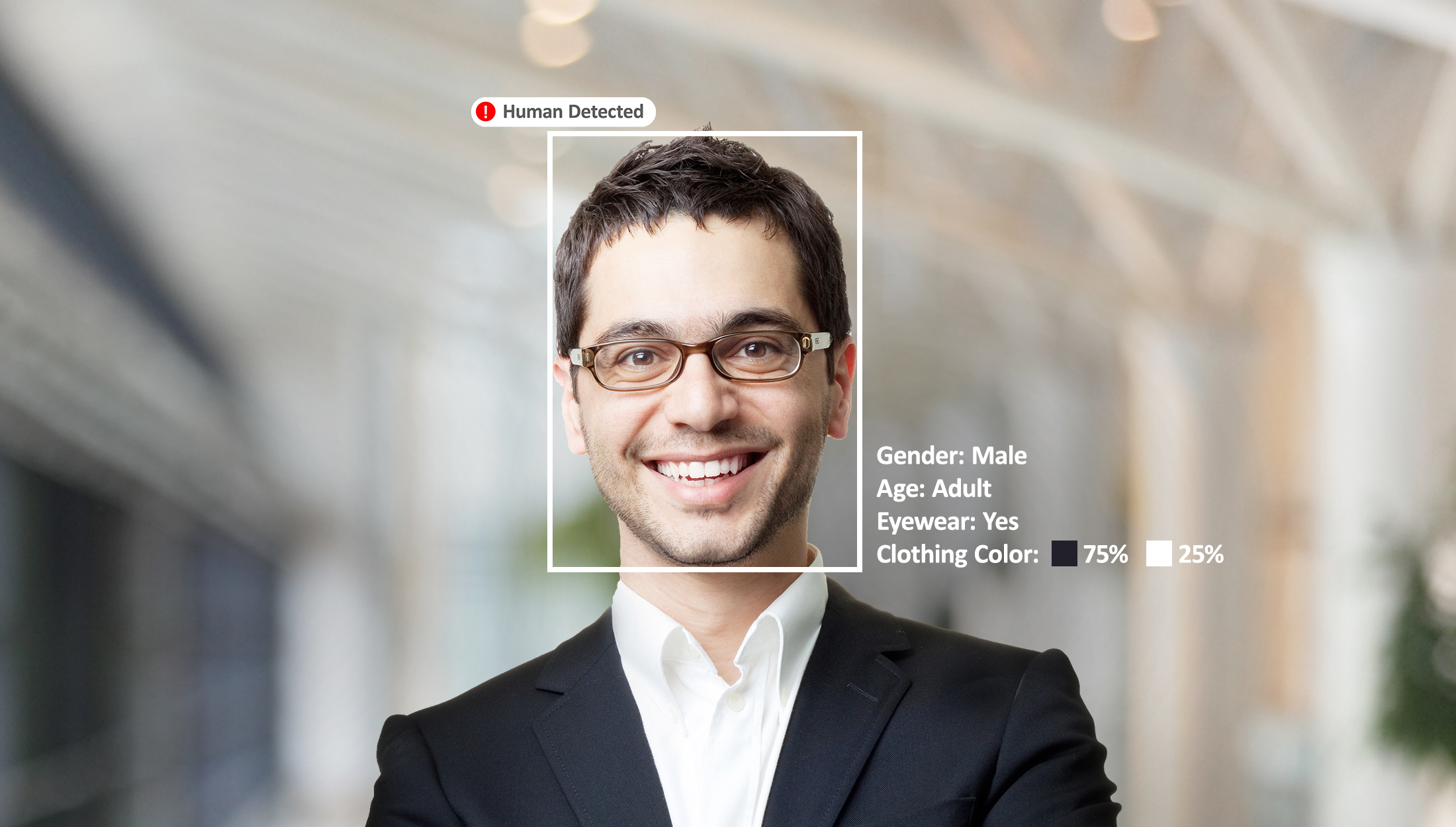

Video IoT

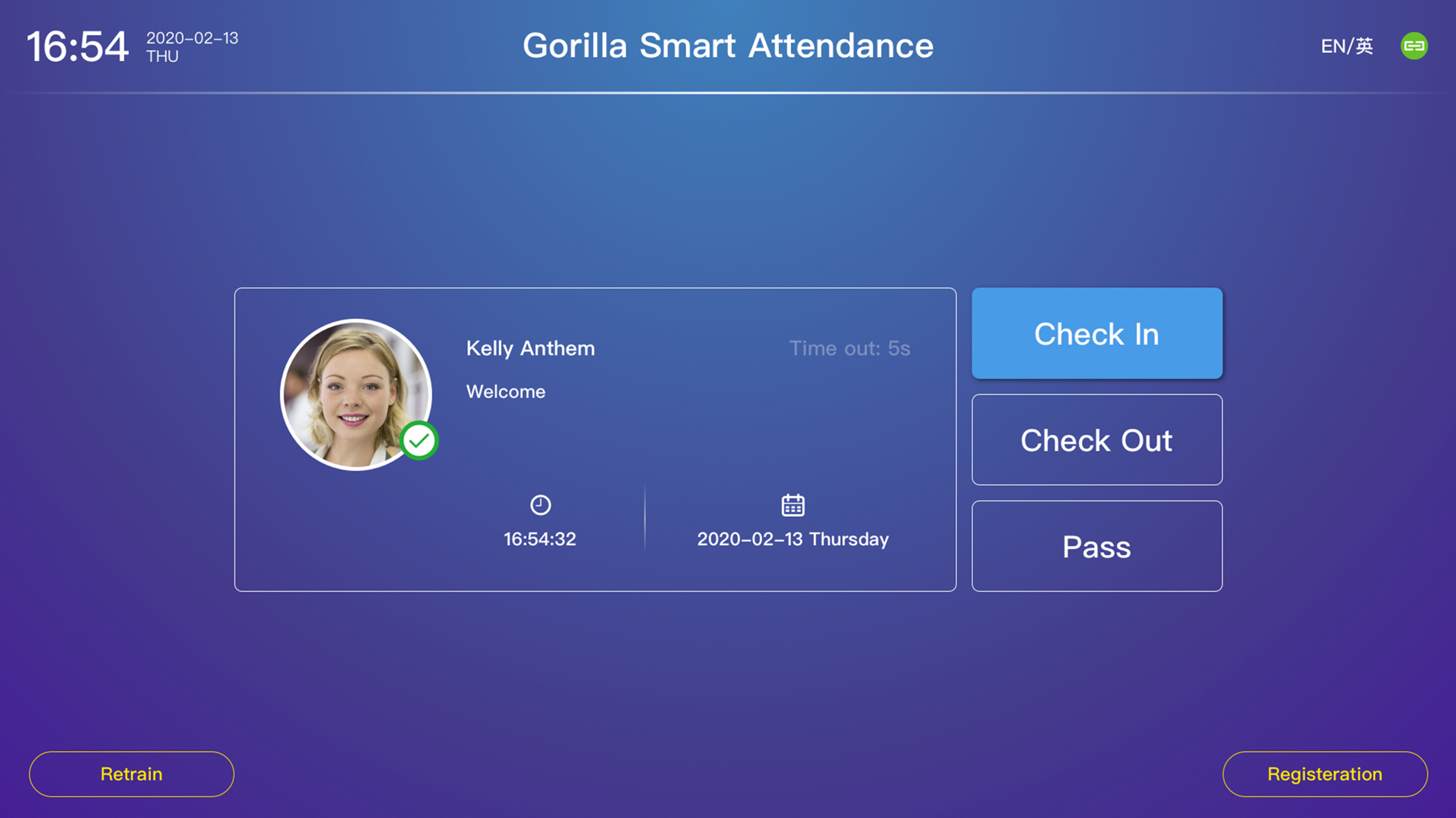

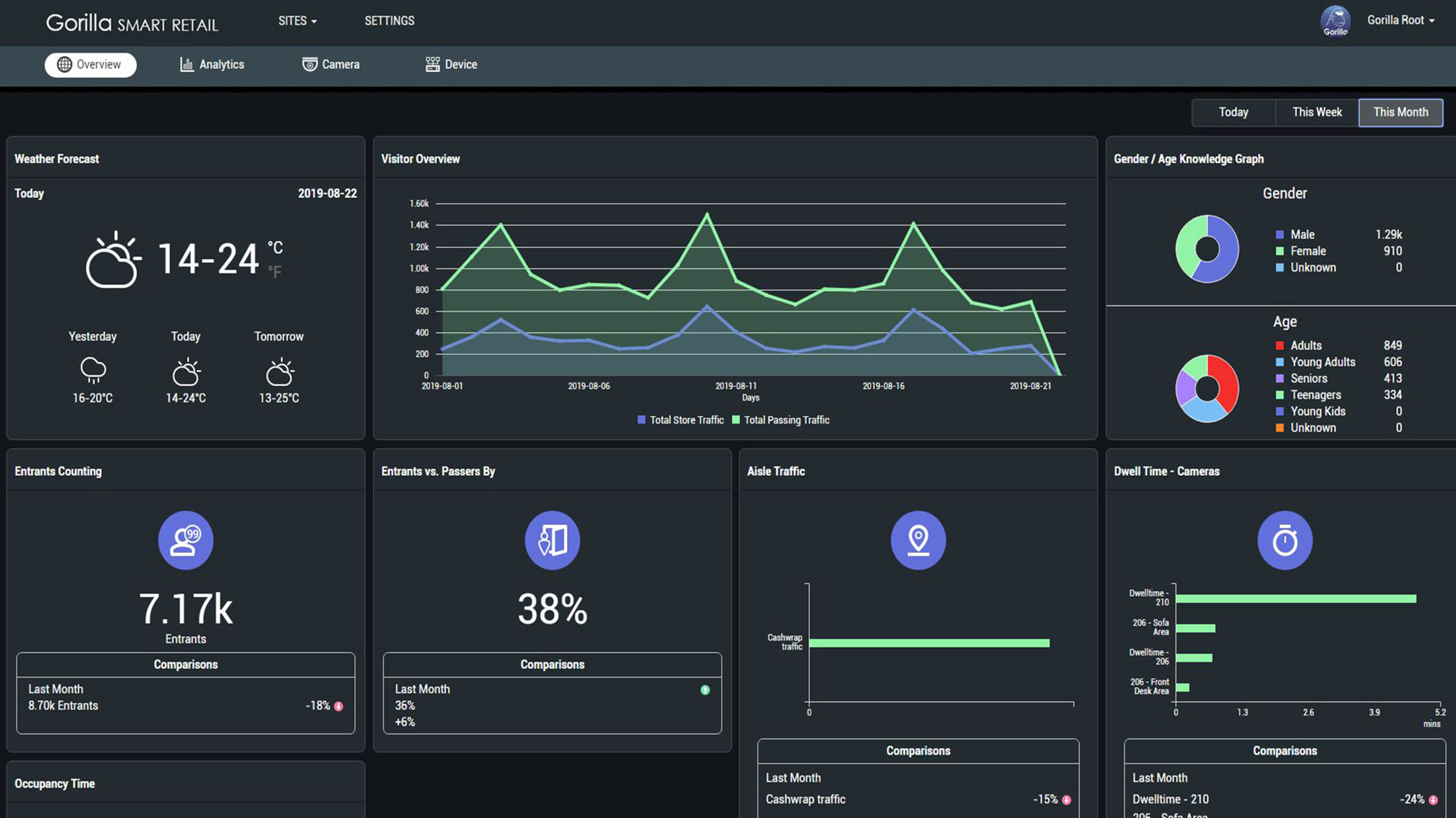

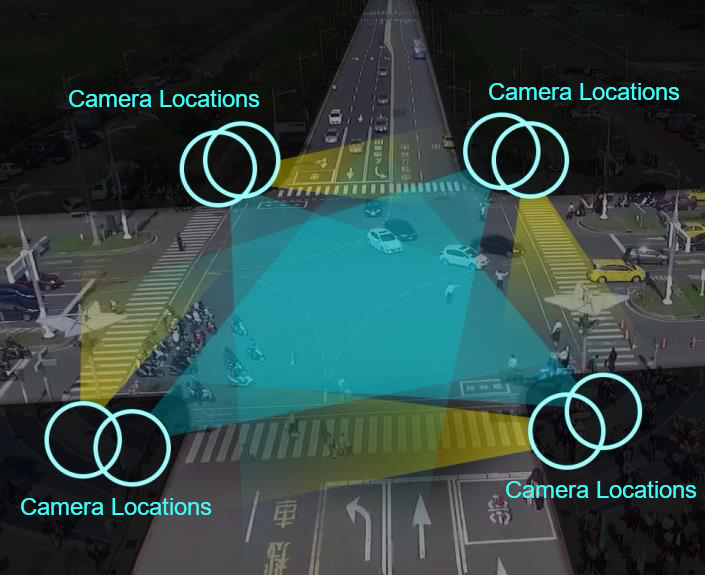

• Video analytics enhances authentication systems, for example by using facial recognition for touchless entry.
• Additional functions are being released, such as mask and temperature detection or crowd density detection, to help sites follow COVID-19 regulations.

Cybersecurity

• As enterprises embrace digital transformation, their data needs to be stored and shared safely. Cybersecurity solutions help organizations defend against attackers and keep their internal and external networks well guarded.

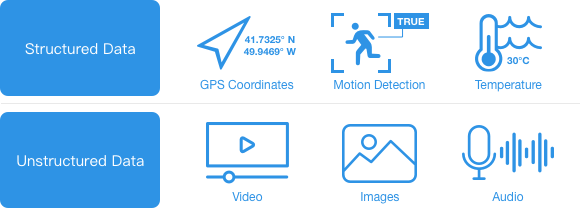

Big Data

• By transforming unstructured data into structured information, big data solutions make data analysis faster and more efficient. They can also collate disparate information, allowing organizations to make more informed decisions.

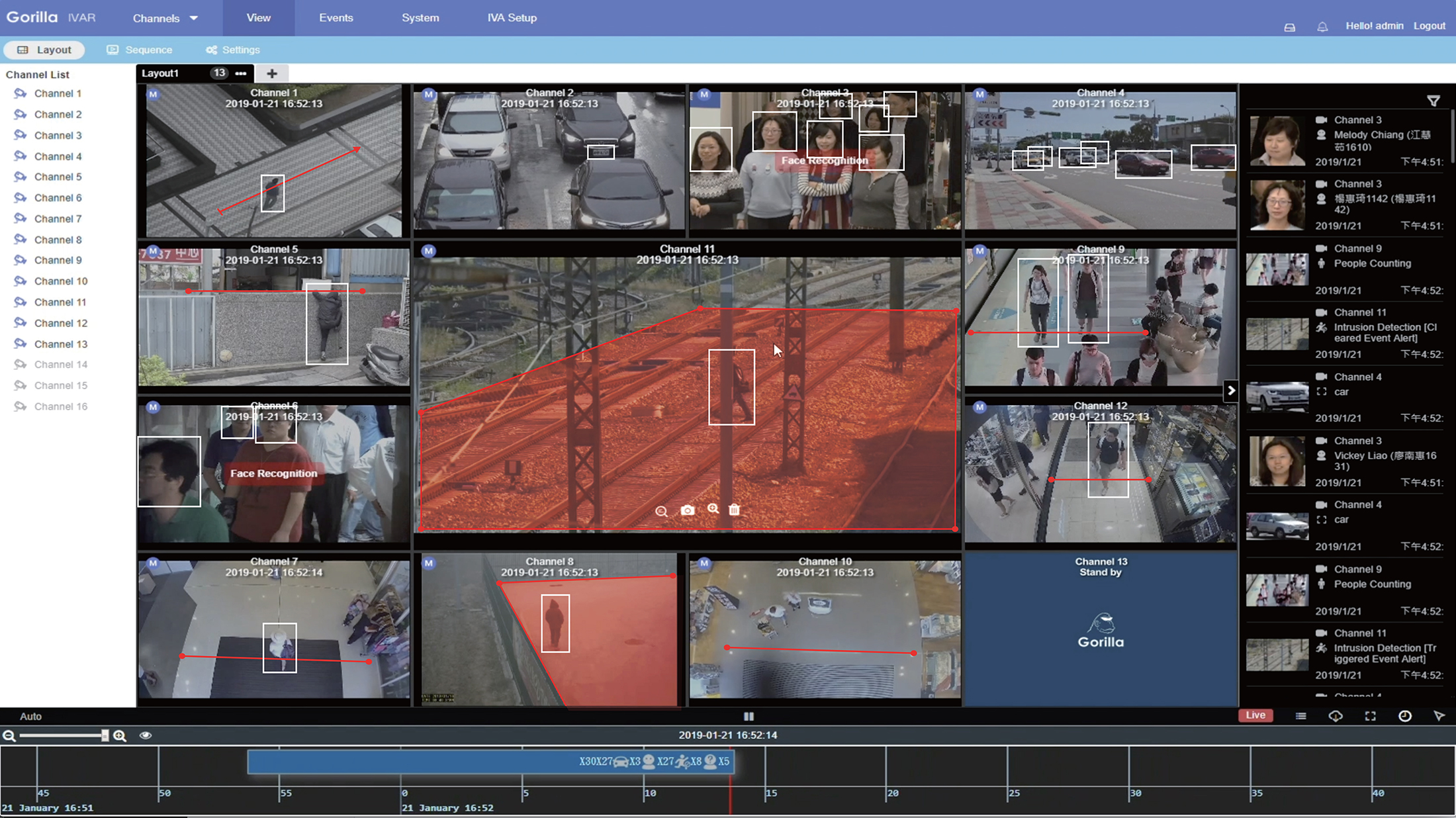

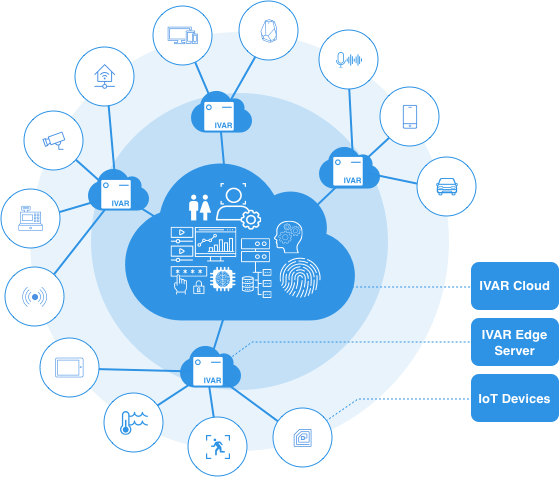

During this challenging time, companies should stay open to digital transformation. Both the pandemic and the development of edge AI and edge computing are pushing companies to speed up their digital transformation plans. Gorilla, which specializes in edge computing, can help organizations transition easily and make operations smoother in the long run.

Click here to learn more about Gorilla’s post-pandemic solutions: https://www.gorilla-technology.com/IVAR/Post-Pandemic-Area-Management

[i] Harvard Business Review, https://hbr.org/resources/pdfs/comm/microsoft/Competingin2020.pdf