What is Video Analytics?

Picture this: you’re in a crowded train station and have lost your friend in the crowd. How do you and your brain go about picking your friend out? Do you go through the same process each time you look for something, or does it depend on what you’re seeking? From a human perspective, looking for things seems straightforward, and we can usually describe the process to others easily. Even so, the way we search for and identify things depends on what we’re searching for. Finding a lost friend in a station is different from searching for your keys before going to the office.

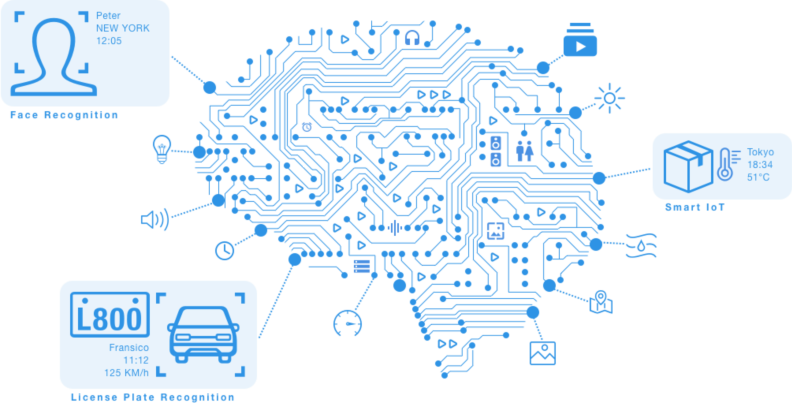

Now imagine how we might get computers to look for things. They would need some kind of input, a way to detect specific objects and to recognize and differentiate between them, and a way to notify us when the requested result is or is not found. This process is what we call intelligent video analytics, or IVA for short.

This article explains how different kinds of IVA work and gives some examples along the way.

What Video Analytics Does

The process of getting IVA output from software is similar to how people visually detect and recognize things. In essence, video analytics works in three steps.

- Video analytic software breaks down video signals into frames. This article does not describe this step in detail, but how digital video works is an interesting topic and good to understand before we break down the next steps.

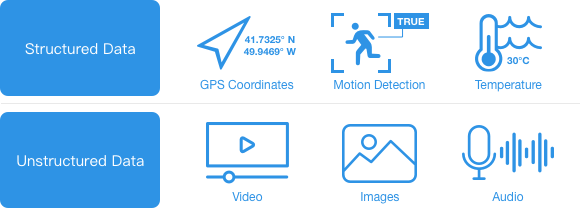

- The software then splits the video frames into video data and analytic data, and uses algorithms to process the analytic data and produce the desired results.

- And finally, it delivers the result in a predetermined manner.
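The three steps above can be sketched as a small pipeline. This is only an illustrative sketch, not how any particular product works: the `Frame` and `Event` types, the toy brightness check in `analyze`, and the delivery callback are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List


@dataclass
class Frame:
    index: int
    pixels: List[List[int]]  # grayscale rows; a stand-in for real image data


@dataclass
class Event:
    frame_index: int
    label: str


def split_into_frames(video: Iterable[List[List[int]]]) -> Iterator[Frame]:
    """Step 1: turn a video signal into individually numbered frames."""
    for index, pixels in enumerate(video):
        yield Frame(index, pixels)


def analyze(frame: Frame, threshold: int = 200) -> List[Event]:
    """Step 2: derive analytic data from a frame (a toy brightness check)."""
    brightest = max(max(row) for row in frame.pixels)
    if brightest >= threshold:
        return [Event(frame.index, "bright_object")]
    return []


def run_pipeline(video: Iterable[List[List[int]]],
                 deliver: Callable[[Event], None]) -> None:
    """Step 3: hand each result to a predetermined delivery callback."""
    for frame in split_into_frames(video):
        for event in analyze(frame):
            deliver(event)


# Three tiny 2x2 "frames"; only the second contains a bright pixel.
video = [
    [[10, 20], [30, 40]],
    [[10, 250], [30, 40]],
    [[0, 0], [0, 0]],
]
alerts: List[Event] = []
run_pipeline(video, alerts.append)
print(alerts)
```

In a real system the delivery callback might write to a dashboard or send a notification; here it simply appends to a list.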

Approaches to Video Analytic Processing

Let’s get into the details of the second step in the list above, as it’s what most people have been talking about recently.

Depending on the goal, video needs to be processed using different methods in order to deliver relevant results. Gorilla has categorized the most widely used types of analytics into five fundamental IVA groups which are described in more detail below.

1. Behavior Analytics

These analytics use algorithms that are designed to look for a specific behavior.

Thinking more deeply, a behavior could be defined as action over time. With that in mind, each Behavior Analytic needs more than one frame from the video to determine if an event or behavior has occurred. So it follows that the algorithms in Behavior Analytics look for changes from frame to frame over time to identify a very specific and predefined event or action. We’ve broken down and classified the Behavior Analytics that are used in our solutions here:

People Counting

The People Counting IVA does just that: it detects and counts people over a specified period as they enter a zone and/or cross a line that users define in the software.

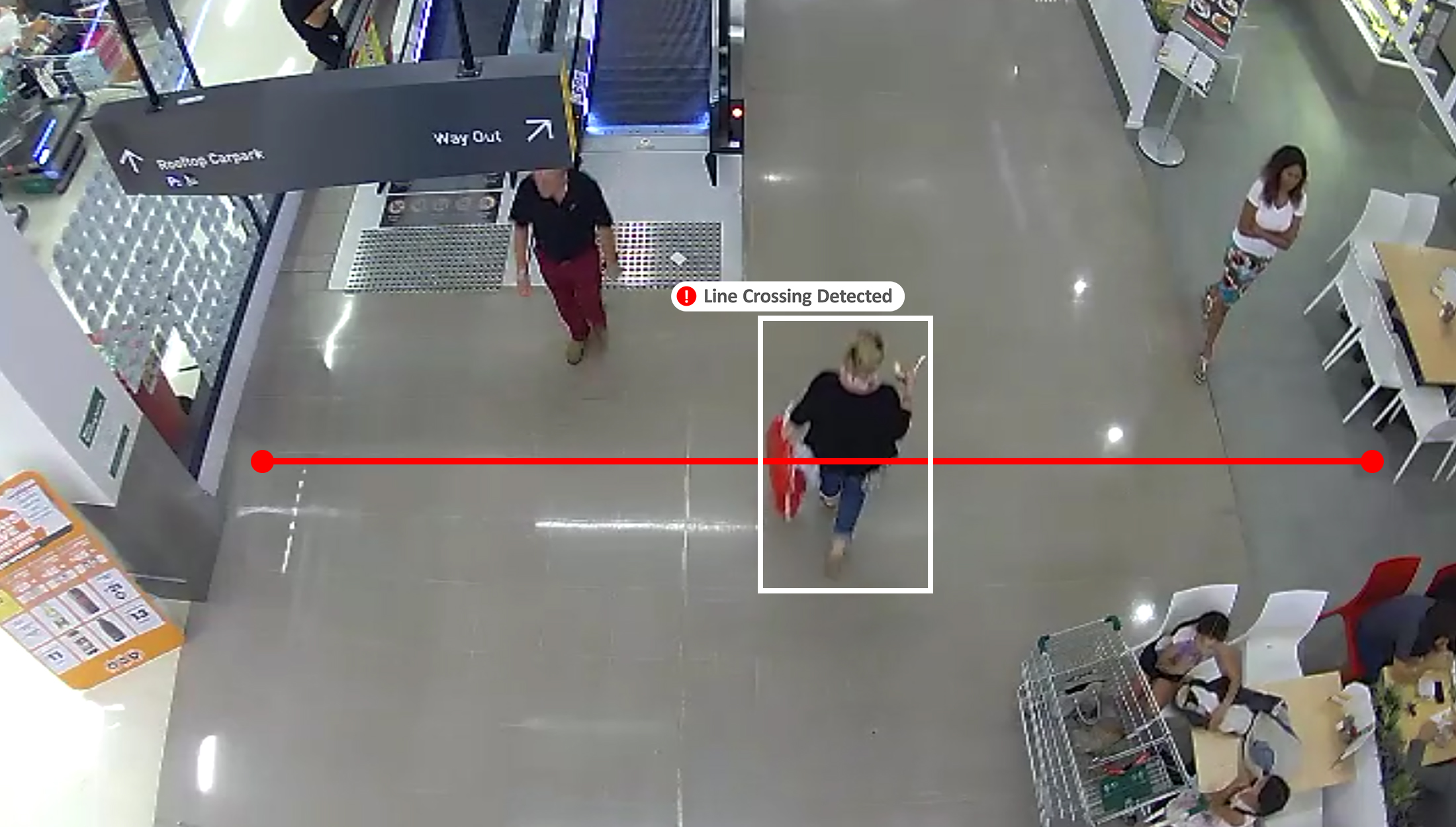

Line Crossing

This IVA detects when people cross a line (or lines) of user-defined length and position.
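One common way to decide whether a tracked person has crossed a user-defined line is a segment intersection test between the line and the person's movement from one frame to the next. The sketch below assumes that an upstream tracker already supplies positions per person; the function names and the track format are hypothetical, and this is not necessarily how any specific product implements it.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def _orientation(a: Point, b: Point, c: Point) -> float:
    """Signed area test: positive if c is left of the line a->b, negative if right."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def crosses_line(prev_pos: Point, curr_pos: Point,
                 line_start: Point, line_end: Point) -> bool:
    """True if the movement prev_pos -> curr_pos strictly crosses the line segment."""
    d1 = _orientation(line_start, line_end, prev_pos)
    d2 = _orientation(line_start, line_end, curr_pos)
    d3 = _orientation(prev_pos, curr_pos, line_start)
    d4 = _orientation(prev_pos, curr_pos, line_end)
    # The endpoints of each segment must lie on opposite sides of the other.
    return d1 * d2 < 0 and d3 * d4 < 0


def count_crossings(tracks: Dict[str, List[Point]],
                    line_start: Point, line_end: Point) -> int:
    """Count tracks that cross the line at least once (a simple people count)."""
    count = 0
    for positions in tracks.values():
        steps = zip(positions, positions[1:])
        if any(crosses_line(p, q, line_start, line_end) for p, q in steps):
            count += 1
    return count


# A vertical line from (5, 0) to (5, 10) and three hypothetical tracks.
tracks = {
    "a": [(3, 5), (7, 5)],          # walks straight across the line
    "b": [(3, 5), (4, 5)],          # stops before reaching it
    "c": [(1, 1), (6, 2), (8, 2)],  # crosses on the first step
}
print(count_crossings(tracks, (5, 0), (5, 10)))
```

The strict comparison ignores movements that only touch the line or run along it, which is a design choice for this sketch rather than a requirement.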

Intrusion Detection

Intrusion Detection monitors user-created zones to detect any activity or entries by moving objects (like people).
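A zone check like this can be sketched with the classic ray-casting point-in-polygon test. The example assumes that detections arrive as an ID mapped to a single reference point, and that zones are lists of polygon vertices; both are assumptions made for illustration.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def point_in_zone(point: Point, zone: List[Point]) -> bool:
    """Ray casting: count how many zone edges a ray going right from the point crosses."""
    x, y = point
    inside = False
    n = len(zone)
    for i in range(n):
        x1, y1 = zone[i]
        x2, y2 = zone[(i + 1) % n]
        # Only edges that straddle the point's y coordinate can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def intrusions(detections: Dict[str, Point], zone: List[Point]) -> List[str]:
    """Return the IDs of detected objects whose reference point lies inside the zone."""
    return [obj_id for obj_id, pos in detections.items() if point_in_zone(pos, zone)]


zone = [(0, 0), (10, 0), (10, 10), (0, 10)]  # a square zone drawn by the user
detections = {"person-1": (5, 5), "person-2": (15, 5)}
print(intrusions(detections, zone))
```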

Direction Detection

This IVA monitors a user-created zone for people moving A) within the zone AND B) in the marked direction. Movements in the opposite direction do not trigger an alert.

Direction Violation Detection

This IVA works the same way as the Direction Detection IVA, but it detects and alerts on movements in the opposite direction. As an example, security checks at airports and other transportation hubs stand to benefit from this type of IVA.
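Both direction-based IVAs can be sketched by comparing a person's movement vector with the marked direction using the cosine of the angle between them. The function name, the three labels, and the `min_cosine` tolerance below are assumptions chosen for the example.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def classify_movement(prev_pos: Point, curr_pos: Point,
                      marked_direction: Tuple[float, float],
                      min_cosine: float = 0.5) -> str:
    """Label a movement as 'with', 'against', or 'ignored' relative to a direction."""
    dx, dy = curr_pos[0] - prev_pos[0], curr_pos[1] - prev_pos[1]
    speed = math.hypot(dx, dy)
    norm = math.hypot(marked_direction[0], marked_direction[1])
    if speed == 0 or norm == 0:
        return "ignored"  # no movement, or no usable direction
    cosine = (dx * marked_direction[0] + dy * marked_direction[1]) / (speed * norm)
    if cosine >= min_cosine:
        return "with"      # would trigger Direction Detection
    if cosine <= -min_cosine:
        return "against"   # would trigger Direction Violation Detection
    return "ignored"       # roughly sideways movement


marked = (1.0, 0.0)  # the marked direction points to the right
print(classify_movement((0, 0), (5, 0), marked))
print(classify_movement((5, 0), (0, 0), marked))
print(classify_movement((0, 0), (0, 5), marked))
```

With `min_cosine=0.5`, movement within 60 degrees of the marked direction counts as matching it. A real deployment would also check that the person is inside the zone first.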

Loitering Detection

The Loitering Detection IVA monitors figures or people who enter and then remain in a user-created zone for a specified period.
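Loitering comes down to tracking how long each person has been inside the zone without leaving. A minimal sketch, assuming a tracker that emits time-ordered `(timestamp, track_id, in_zone)` observations (a hypothetical format):

```python
from typing import Dict, Iterable, List, Tuple

Observation = Tuple[float, str, bool]  # (timestamp in seconds, track ID, inside zone?)


def loitering_ids(observations: Iterable[Observation],
                  max_dwell_seconds: float) -> List[str]:
    """Return track IDs that stayed in the zone continuously for at least the limit."""
    entered_at: Dict[str, float] = {}
    flagged: List[str] = []
    for timestamp, track_id, in_zone in observations:
        if not in_zone:
            # Leaving the zone resets that person's dwell timer.
            entered_at.pop(track_id, None)
            continue
        start = entered_at.setdefault(track_id, timestamp)
        if timestamp - start >= max_dwell_seconds and track_id not in flagged:
            flagged.append(track_id)
    return flagged


observations = [
    (0, "a", True), (0, "b", True),
    (20, "a", True), (20, "b", False),  # "b" steps out of the zone
    (40, "a", True), (40, "b", True),   # "b" re-enters, timer restarts
    (65, "a", True), (65, "b", True),
]
print(loitering_ids(observations, max_dwell_seconds=60))
```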

2. People/Face Recognition

People and Face Recognition could easily be sliced into two core groups, but we keep them as one since they are so closely related. As Behavior Analytics need to detect human shapes to perform effectively, People/Face Recognition IVAs are next on our list.

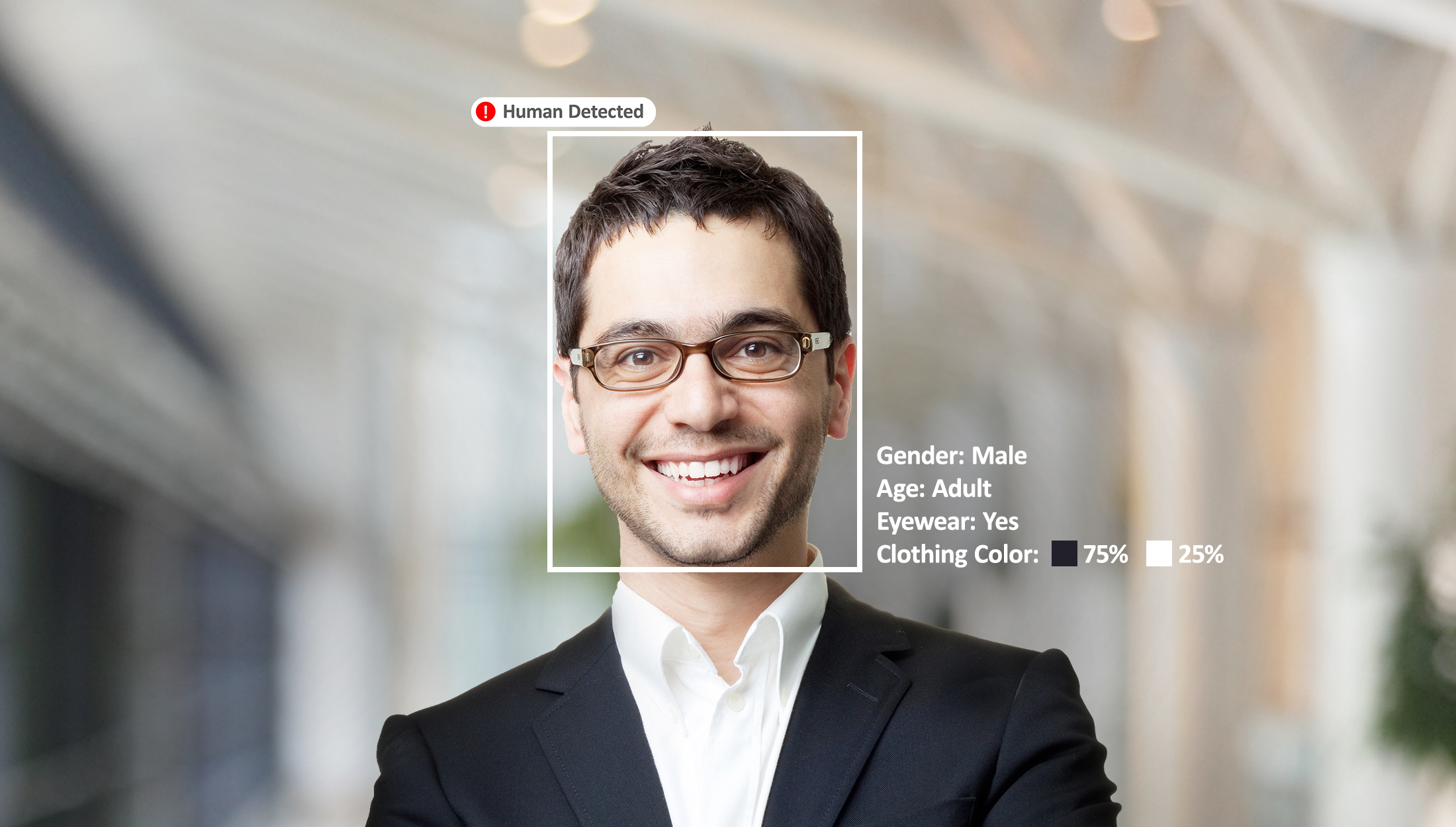

Human Detection

The Human Detection IVA detects human figures within the video. Once detected, features like clothing color, gender, eyewear, masks, and age group can be detected as well.

Face Recognition

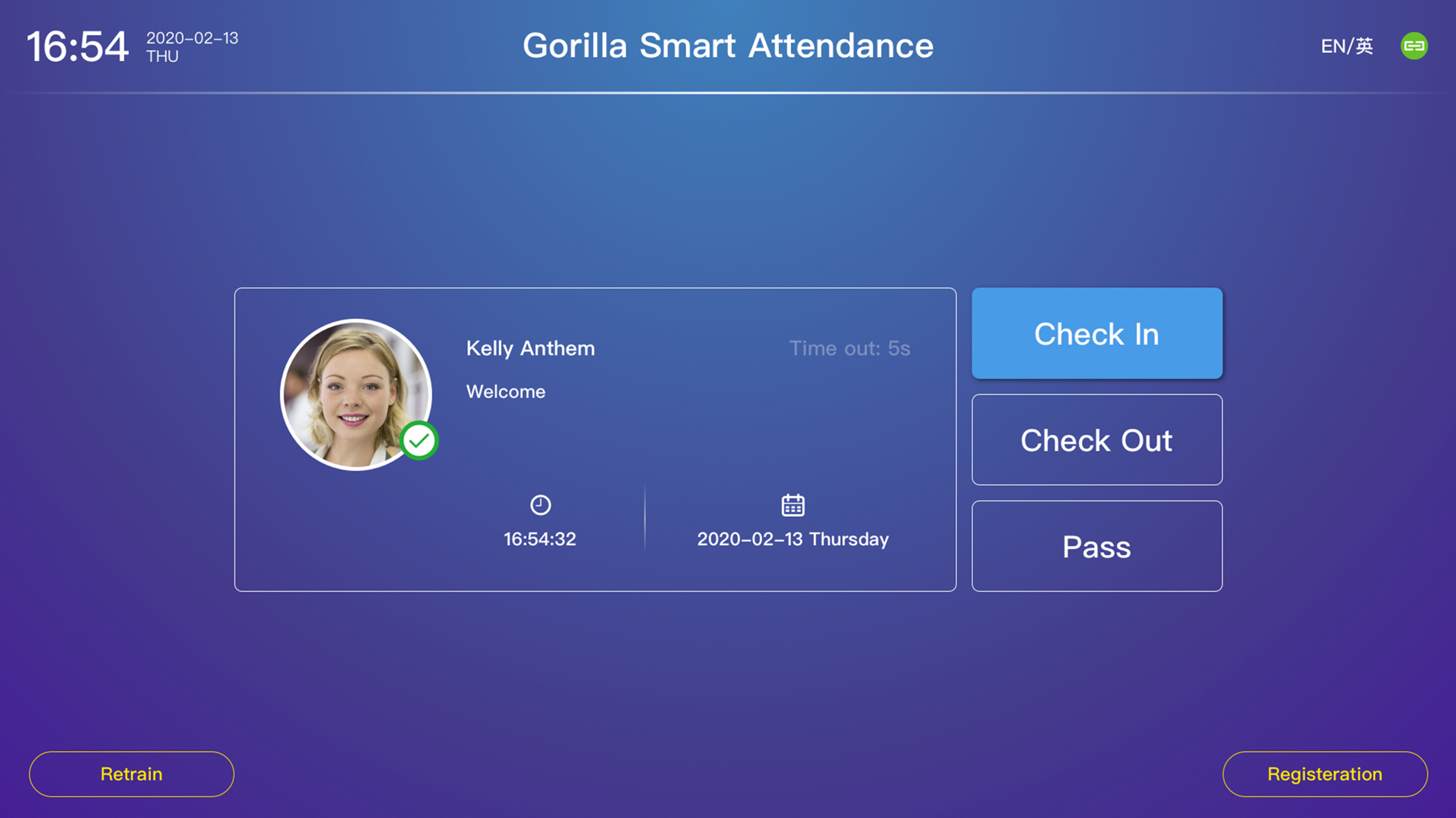

This IVA recognizes and identifies faces. This is used in conjunction with Gorilla’s BAP software and its facial recognition database. While uses for this are myriad (and often in the news), we most often see Face Recognition used for Watch Lists, VIP identification, Attendance Systems, and Black Lists.

3. License Plate Recognition

Some people collect license plates and like them because different places have different plates. However, this variety makes it incredibly difficult for one License Plate Recognition (LPR) IVA to work globally (or even just from state to state). Currently, we generally see this IVA added as a customized feature because adding all the different and beautiful plates into the general release of the software would require too much space.

Having said that, there are currently two approaches to LPR.

- Parking LPR detects the license plates of parked vehicles in user-created zones, vehicles travelling slowly, or vehicles stopped at boom gates.

- Road Traffic LPR detects the license plates of moving vehicles, or vehicles stopped at a stoplight.

4. Object Recognition

Replace the face in the Face Recognition IVA with any given object and you’ll get the Object Recognition IVA. Here, algorithms are used to train the software to detect and recognize a specific object, like a hot dog. There are a lot of different objects in the world (even more than there are license plate types!), so the training and size requirements add up quickly.

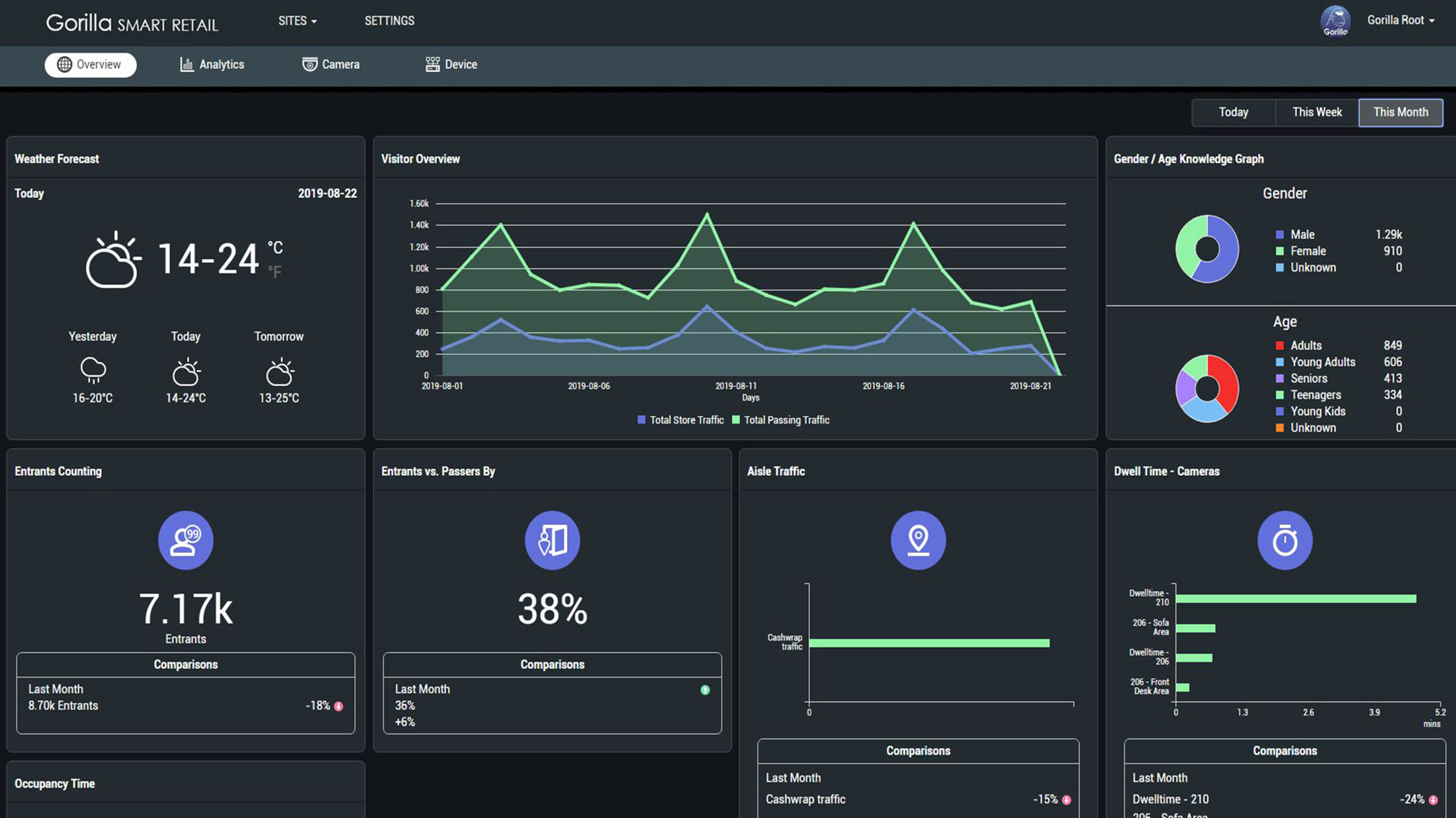

5. Business Intelligence

Dashboards that show data about various business activities are a valuable asset in just about any retail or enterprise setting. Using video analytics within a dashboard to enrich those results should be a part of everyone’s toolbox.

While the IVAs in numbers one through four above are widely used for surveillance scenarios, there are a multitude of business scenarios that can reap the benefits that video analytics offers. To see some great examples, check out how Gorilla is applying these to create intelligent solutions for multiple business markets and industries.

Putting the Video Analytic Idea Together

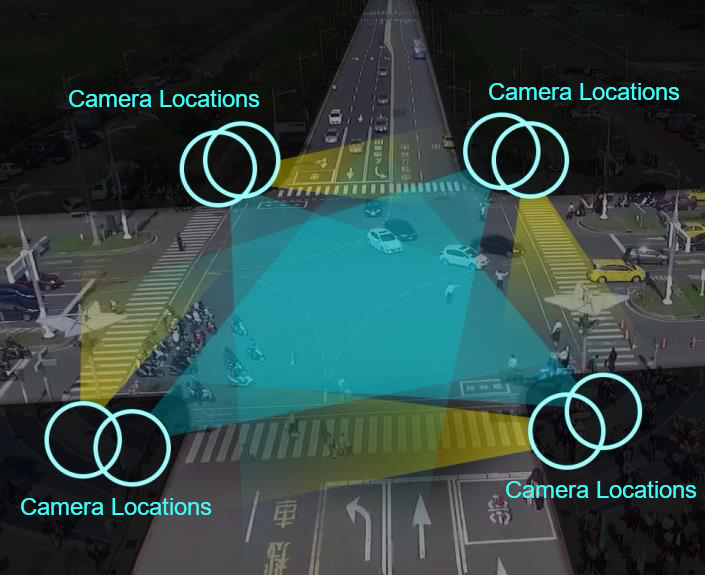

As you read above, these IVAs all orchestrate various algorithms to achieve and deliver results. Essentially, though, an IVA determines whether a defined event or behavior has occurred within a video camera’s field of view and then notifies the designated user of the finding.

In a similar manner, most of us go through varying processes depending on whether we’re searching for keys at home or for our friend in a busy station.

Video Analytic Processing Power

Thinking about the entire process, could a single solution do everything effectively? It seems like an insurmountable number of tasks: from processing each frame’s analytic data and displaying it together with the video, to creating a complete video system with an array of user-selectable and customizable IVAs in a building or any other scenario, all the way to putting multiple systems together that report back to a central control center.

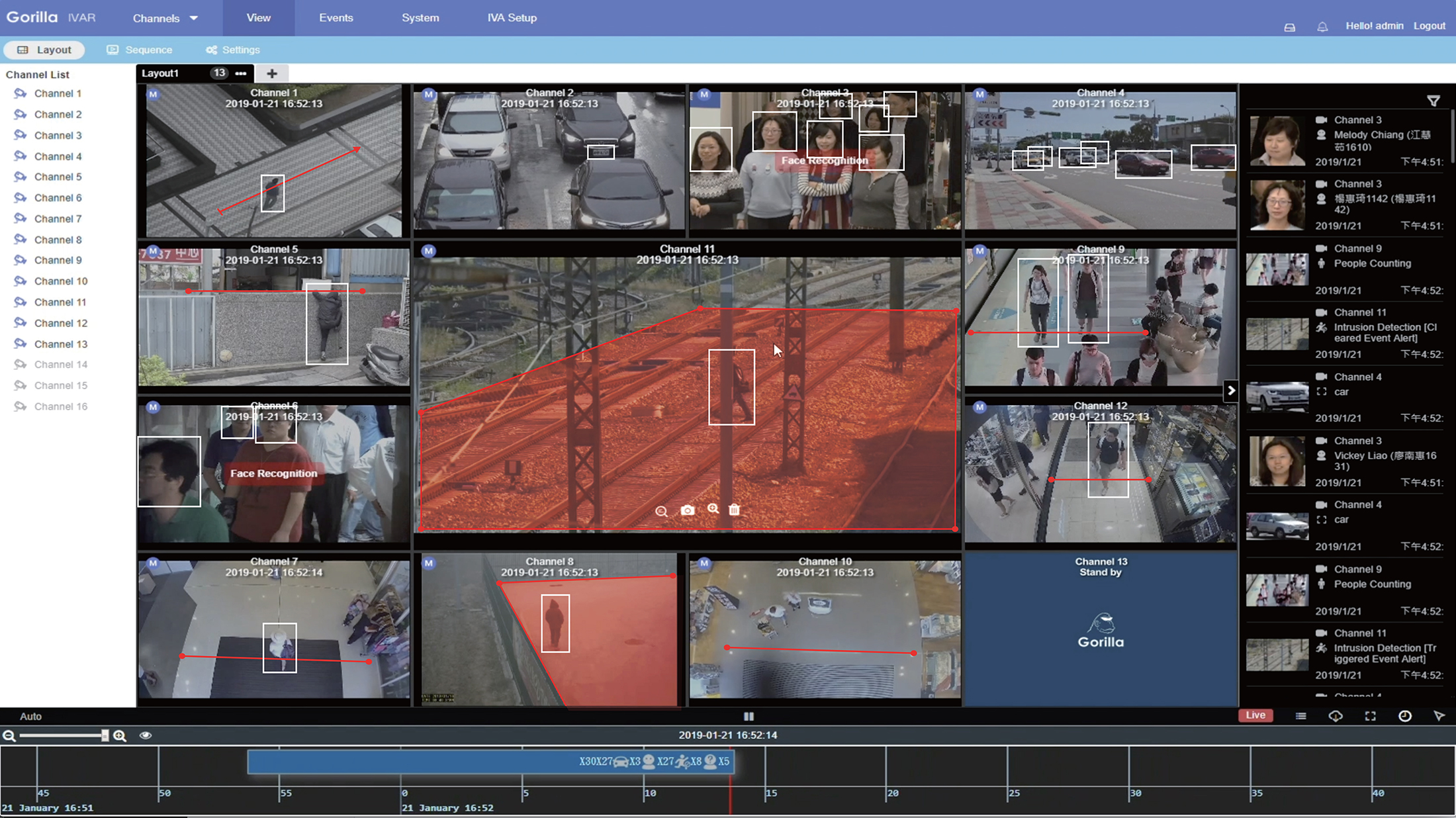

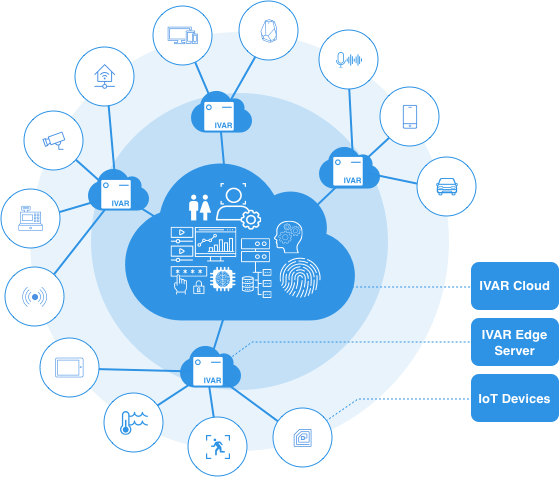

It’s not impossible. To demonstrate this, let’s look at what IVAR™ from Gorilla can do and how it operates.

CPU and GPU Video Analytic Processing

Video analytics as a whole requires a lot of dedicated processing power. Keep in mind that before software optimization and edge devices with capable CPUs, video analytics processed both video and analytic data on one machine and required additional GPUs to do most of the work. The technology for splitting the two has advanced to the point that it’s now possible to keep the video data at the edge while pushing the analytic data up the network for quick processing.
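To get a feel for why keeping video at the edge and sending only analytic data can be attractive, the sketch below compares one uncompressed 1080p RGB frame with a small JSON message describing a single detection. The message fields and the `analytic_payload` helper are hypothetical, not a real IVAR format. Real video streams are compressed, so the gap is smaller in practice.

```python
import json
from typing import Any, Dict, List


def analytic_payload(camera_id: str, frame_index: int,
                     detections: List[Dict[str, Any]]) -> bytes:
    """Serialize analytic data for one frame as a compact JSON message."""
    message = {
        "camera_id": camera_id,
        "frame_index": frame_index,
        "detections": detections,
    }
    return json.dumps(message).encode("utf-8")


# One uncompressed 1920x1080 frame with 3 bytes per pixel (RGB).
raw_frame_bytes = 1920 * 1080 * 3

payload = analytic_payload(
    "cam-01",
    42,
    [{"label": "person", "box": [100, 200, 180, 420], "confidence": 0.91}],
)
print(len(payload), raw_frame_bytes)
```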

One technology, which Gorilla was the first to adopt, is the Intel® Distribution of OpenVINO™ toolkit. Using the OpenVINO™ toolkit to optimize IVAR keeps deployment and upkeep costs low while decreasing operating temperatures by minimizing the need for expensive GPUs.

Delivering and Deploying Video Analytics

Given the multitude of IVA capabilities and applications in the world today, Gorilla is often asked about delivering and deploying video analytics and the IVAR platform.

Q: How many video feeds can IVAR handle?

A: IVAR is a highly scalable solution that fits nearly any size system, from a single camera with one IVA to multiple systems with hundreds of cameras running multiple IVAs.

Q: I need a complete VMS with integrated IVA, is IVAR right for my company?

A: IVAR is versatile: it can be used as a standalone all-in-one video surveillance solution, integrated via IVAR’s open API, or added to an existing Milestone XProtect® system.

Final Thoughts on Video Analytics

The next time you find yourself in a crowded station and need to locate a missing friend (which is hopefully never), think of how a computer attached to a camera might go about doing it. The way that video analytics works is an incredibly interesting and broad topic to cover in one article. If you made it this far in the article, you should now have a solid understanding of how video analytics operates and how video analytic software solutions like IVAR are driving technology forward.

Further Reading on Video Analytics

To read more about edge AI, click here

For more info on IVAR, click here
